White Paper NESS

Objectives of NESS

NESS is sound spatialization software. It recreates a coherent sound scene over a large listening area (with no sweet spot) on a playback system (a set of loudspeakers) by placing virtual sound objects at given locations. NESS can spatialize up to 16 sound sources on 4 groups of speakers, for a total of 32 outputs. NESS also includes a reverberation engine to synthesize coherent acoustic spaces, and tools for managing source movements and trajectories.

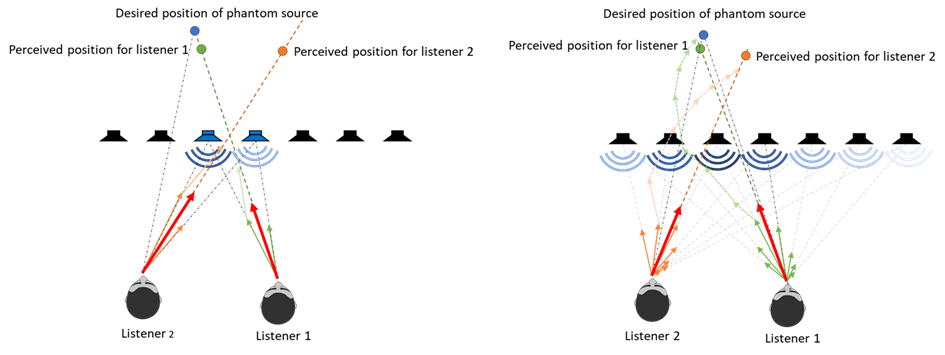

Perceived localization of a sound source on a set of loudspeakers

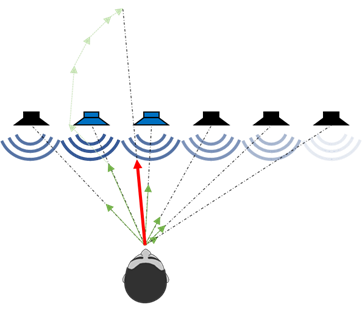

When the same signal reaches our ears from different directions with arrival-time differences of less than 30 ms, our brain interprets these signals as coming from a single sound source. The perceived direction of this fused source depends on the amplitudes and delays of the signal coming from each loudspeaker. The source is generally perceived in the direction of the loudspeaker heard first (precedence effect) or of the loudest loudspeakers (energy vector model). The contributions of all loudspeakers can be analyzed with psychoacoustic models to predict the perceived direction of the source [1].

In the figure, the two loudspeakers that contribute the most (the closest or the loudest) are shown in blue. The contribution of each loudspeaker is represented by the length of the green arrow pointing towards it (energy vector). Summing these vectors models the perceived direction of the sound source, shown in red.
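The vector summation described above can be sketched in a few lines of Python. This is a minimal illustration of the classic energy vector (each loudspeaker's unit direction weighted by its squared gain), assuming angles in degrees measured counterclockwise from the front and ignoring delays. The function name `energy_vector_direction` is hypothetical and is not part of NESS.

```python
import math

def energy_vector_direction(speaker_angles_deg, gains):
    """Estimate the perceived direction (degrees) and the norm of the
    energy vector of a phantom source.

    Each loudspeaker contributes a unit vector pointing towards it,
    weighted by its energy (gain squared). Delays are ignored here.
    """
    energies = [g * g for g in gains]
    total = sum(energies)
    if total == 0:
        raise ValueError("at least one gain must be non-zero")
    x = sum(e * math.cos(math.radians(a))
            for e, a in zip(energies, speaker_angles_deg)) / total
    y = sum(e * math.sin(math.radians(a))
            for e, a in zip(energies, speaker_angles_deg)) / total
    return math.degrees(math.atan2(y, x)), math.hypot(x, y)

# Two loudspeakers at +30 and -30 degrees, the left one (+30) louder:
# the predicted direction lies between 0 and +30 degrees.
direction, norm = energy_vector_direction([30.0, -30.0], [1.0, 0.5])
```

In this model, a norm close to 1 is usually read as a well-focused image, while smaller values indicate a wider, more diffuse source.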

The NESS algorithms are chosen to minimize the sound source localization error for a large listening area.

Existing spatialization methods

There are many sound spatialization methods. Here is a comparison of the most common ones:

VBAP

Principle

VBAP (vector-based amplitude panning) generalizes the amplitude panning used in stereo mixing to a loudspeaker array. Only the loudspeakers lying in the direction of the source, as seen from the listening position, play the source signal. The gain of each loudspeaker is calculated from the listening position, the loudspeaker positions and the position of the virtual source, based on the summation of energy vectors [2].
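As an illustration, here is a minimal sketch of two-dimensional pair-wise VBAP in the vector-base formulation commonly attributed to Pulkki: the source direction is expressed as a weighted sum of the two loudspeaker directions surrounding it, and the weights are normalized to constant power. The function name `vbap_pair_gains` and the angle convention (degrees, counterclockwise from the front, listener at the origin) are assumptions for this example.

```python
import math

def vbap_pair_gains(source_deg, spk1_deg, spk2_deg):
    """2D pair-wise VBAP gains for one loudspeaker pair (illustrative sketch)."""
    # Unit vectors from the listening position towards the source and loudspeakers
    px, py = math.cos(math.radians(source_deg)), math.sin(math.radians(source_deg))
    l1x, l1y = math.cos(math.radians(spk1_deg)), math.sin(math.radians(spk1_deg))
    l2x, l2y = math.cos(math.radians(spk2_deg)), math.sin(math.radians(spk2_deg))
    # Solve p = g1 * l1 + g2 * l2 (2x2 linear system, Cramer's rule)
    det = l1x * l2y - l2x * l1y
    if abs(det) < 1e-12:
        raise ValueError("the two loudspeakers must not be aligned with the listener")
    g1 = (px * l2y - l2x * py) / det
    g2 = (l1x * py - px * l1y) / det
    # Normalize for constant power: g1^2 + g2^2 = 1
    norm = math.hypot(g1, g2)
    return g1 / norm, g2 / norm
```

A source outside the pair yields a negative gain, which signals that another loudspeaker pair should be selected; this is why only the loudspeakers around the source direction play its signal.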

Advantages

- Very good rendering of the angular localization at the listening position

- Low algorithmic complexity

Disadvantages

- The loudspeakers must be equidistant from the listener, and their layout is often standardized, which makes it difficult to reproduce under real installation constraints.

- Each loudspeaker must cover the entire listening area; otherwise, some sources may be inaudible at some positions.

- Presence of a sweet spot: spatialization is only valid at the listening position for which it has been set.

DBAP

Principle

DBAP (distance-based amplitude panning) spatializes a sound source by calculating the acoustic attenuation due to the distance between the virtual sound source and each loudspeaker. The position of the listener is not taken into account, and all the loudspeakers play the signal [3].
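The DBAP gain computation can be sketched as follows, using the formulas usually quoted from Lossius [3]: a distance exponent derived from the rolloff in dB per doubling of distance, a blur offset added inside the distance, and a normalization to constant total power. Equal loudspeaker weights are assumed, and the function name `dbap_gains` is hypothetical; NESS's own implementation may differ.

```python
import math

def dbap_gains(source, speakers, rolloff_db=6.0, blur=0.0):
    """Distance-based amplitude panning gains (illustrative sketch).

    source: (x, y) position of the virtual source, in metres
    speakers: list of (x, y) loudspeaker positions, in metres
    rolloff_db: attenuation per doubling of distance (6 dB ~ inverse distance law)
    blur: offset r_s, in metres, included in every distance
    """
    a = rolloff_db / (20.0 * math.log10(2.0))  # distance exponent
    dists = [math.sqrt((sx - source[0]) ** 2 + (sy - source[1]) ** 2 + blur ** 2)
             for sx, sy in speakers]
    if min(dists) == 0.0:
        raise ValueError("source exactly on a loudspeaker: use blur > 0")
    raw = [1.0 / d ** a for d in dists]
    # Normalize so that the total power sum(g^2) equals 1
    k = 1.0 / math.sqrt(sum(r * r for r in raw))
    return [k * r for r in raw]
```

Every loudspeaker receives a non-zero gain, which matches the statement that all the loudspeakers play the signal.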

Advantages

- No sweet spot, spatialization is better perceived for a large listening area

- Adaptable to irregular speaker topologies

- Source signal audible on all speakers

Disadvantages

- Limited performance near the loudspeakers: the soundstage is distorted by the precedence effect.

- Requires more CPU resources, since every loudspeaker plays every source

Comparison of localization error for VBAP (left) and DBAP (right)

WFS

Principle

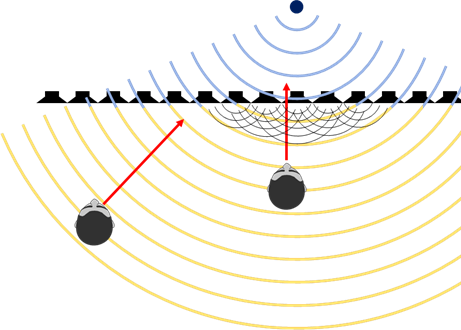

WFS (wave field synthesis) is based on the Huygens-Fresnel principle, which states that any wavefront can be decomposed into a superposition of elementary spherical waves. Using a large number of loudspeakers as elementary sources therefore makes it possible to reconstruct a wavefront, and sound sources can be spatialized by recreating the wavefronts corresponding to the desired positions of the virtual sources. In practice, for sources located behind the loudspeakers, this is done by calculating the delays and attenuations due to acoustic propagation between the virtual source and each loudspeaker. The calculations are then similar to DBAP, with delays added. The WFS principle can be extended to create wavefronts for sources located in front of the loudspeakers, inside the listening area [4].
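The propagation step for a source behind the loudspeakers can be sketched as below. This is a deliberately simplified model, assuming a loudspeaker line at y = 0, the listening area at y > 0, a virtual source at y < 0, spherical spreading (1/r) and a speed of sound of 343 m/s; full WFS driving functions also include a frequency pre-filter and angle-dependent weighting that are omitted here. The names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, at about 20 degrees C (assumed value)

def propagation_delays_and_gains(source, speakers, sample_rate=48000):
    """Delay (in samples) and 1/r attenuation from a virtual point source
    to each loudspeaker (simplified sketch, not a full WFS driving function).
    """
    result = []
    for sx, sy in speakers:
        r = math.hypot(sx - source[0], sy - source[1])
        delay_samples = r / SPEED_OF_SOUND * sample_rate
        gain = 1.0 / max(r, 1e-3)  # guard against a source on a loudspeaker
        result.append((delay_samples, gain))
    return result

# Source 3.43 m behind a loudspeaker at the origin: 10 ms, i.e. 480 samples
delays_gains = propagation_delays_and_gains((0.0, -3.43), [(0.0, 0.0), (2.0, 0.0)])
```

Loudspeakers farther from the virtual source receive a later and weaker copy of the signal, so their outputs combine into a wavefront that appears to originate at the source position.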

Advantages

- Sound spatialization preserved in the entire listening area

- The sound field is faithfully recreated

- Possibility to spatialize sounds inside the listening area

- The precedence effect disappears

Disadvantages

- Requires a large number of closely spaced loudspeakers

- Very heavy calculations due to the large number of audio channels

- Very expensive and not very portable

- Interference (spatial aliasing) above a frequency that depends on the spacing between the loudspeakers.

NESS

Implemented principles

The NESS spatialization algorithms implement the principles of WFS. The gain of each loudspeaker is calculated according to the principles of DBAP [3], and delays are added according to the WFS principle. Adding delays reinforces the spatialization and the focus of the sound sources by exploiting the precedence effect. Perception models based on extended energy vectors show a significant reduction in the localization error and in the perceived width of the source [5] [1]. DBAP also allows the use of irregularly placed loudspeakers.
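The combination of DBAP gains with propagation delays can be illustrated with the hypothetical sketch below; it is not the NESS implementation. It assumes an inverse-distance gain law normalized to unit power (DBAP with a 6 dB rolloff and no blur), a speed of sound of 343 m/s, and delays measured relative to the nearest loudspeaker, rounded to whole samples, so that the nearest loudspeaker is heard first and no common latency is added.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed value)

def ness_style_render(signal, source, speakers, sample_rate=48000):
    """Hypothetical sketch: DBAP-like gains plus relative propagation delays.

    Returns the gains, the integer delays in samples, and one delayed,
    scaled copy of the input signal per loudspeaker.
    """
    dists = [max(math.hypot(sx - source[0], sy - source[1]), 1e-3)
             for sx, sy in speakers]
    # Inverse-distance gains normalized to unit total power
    raw = [1.0 / d for d in dists]
    k = 1.0 / math.sqrt(sum(r * r for r in raw))
    gains = [k * r for r in raw]
    # Delays relative to the nearest loudspeaker (precedence effect)
    d_min = min(dists)
    delays = [round((d - d_min) / SPEED_OF_SOUND * sample_rate) for d in dists]
    # Each output is the source signal, delayed and scaled
    outputs = [[0.0] * dl + [g * s for s in signal]
               for g, dl in zip(gains, delays)]
    return gains, delays, outputs
```

The nearest loudspeaker is both the loudest and the earliest, so the precedence effect and the energy vector point in the same direction, which is the reinforcement described above.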

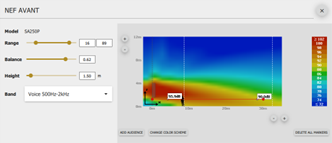

Adaptation to the loudspeaker configuration

The "blur" and "rolloff" parameters described in the DBAP algorithm [3] ensure artifact-free reproduction of the sources, whatever their positions relative to the loudspeakers.

The "blur" parameter smooths gain variations when sources are close to the loudspeakers, or when the loudspeakers are far apart, by adding a larger or smaller offset to the distance calculation.

The "rolloff" parameter intensifies or softens the panning effect by changing the law of acoustic attenuation with distance. A higher rolloff increases the gain differences between loudspeakers, which is useful on small configurations.
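For reference, the DBAP formulas as usually stated by Lossius [3] show how both parameters act; NESS's exact implementation may differ. With the source at $(x_s, y_s)$, loudspeaker $i$ at $(x_i, y_i)$, blur $r_s$, rolloff $R$ in dB per doubling of distance, and equal loudspeaker weights:

$$
d_i = \sqrt{(x_i - x_s)^2 + (y_i - y_s)^2 + r_s^2}, \qquad
g_i = \frac{k}{d_i^{\,a}}, \qquad
a = \frac{R}{20\log_{10} 2}, \qquad
k = \Big(\sum_j d_j^{-2a}\Big)^{-1/2}
$$

The level difference between two loudspeakers then follows directly from the rolloff:

$$
20\log_{10}\frac{g_i}{g_j} = 20\,a\,\log_{10}\frac{d_j}{d_i} = R\,\log_2\frac{d_j}{d_i}
$$

With $R = 6$ dB, a loudspeaker twice as far from the source is 6 dB quieter; with $R = 12$ dB it is 12 dB quieter, which sharpens the panning. Increasing $r_s$ pushes the ratios $d_j / d_i$ towards 1 and prevents $d_i$ from reaching 0, which smooths the gains when a source passes close to a loudspeaker.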

Several functions derived from perception models also make it possible to adjust the perceived width of the source and the variation of intensity with distance.

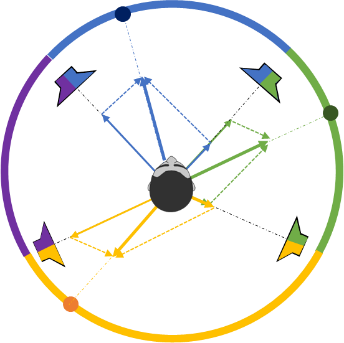

Figure 1: comparison of the perceived directions of arrival and width of a phantom source with the NESS algorithm, with and without delays

Reverb Engine

The NESS reverb engine is built on the principles of an active acoustic reflector [6]. A set of 4 virtual microphones picks up the virtual sound sources and feeds them to 4 reverb buses, where they are convolved with 4 impulse responses. The convolved signals are then respatialized to all the loudspeakers. When the virtual microphones are positioned far away (at the back for a frontal scene, all around for a surround scene), sources moving away from the loudspeakers get closer to the microphones, so the reverberated signal is reinforced for these sources, creating a sense of distance. The method is illustrated on the wfs-diy.net blog.
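The pickup and convolution stages can be sketched as follows. This is a hypothetical illustration: the 1/distance pickup law, the `min_distance` floor and the function names are assumptions, not taken from NESS, and the direct convolution is only suitable for short examples (real engines use FFT-based convolution). The respatialization of the convolved buses is not shown.

```python
import math

def reverb_bus_inputs(sources, mic_positions, min_distance=1.0):
    """Mix every source into every reverb bus with a gain that grows as the
    source approaches the corresponding virtual microphone (assumed 1/d law).

    sources: list of ((x, y), signal) pairs, all signals of equal length
    mic_positions: list of (x, y) virtual microphone positions
    """
    n = len(sources[0][1])
    buses = []
    for mx, my in mic_positions:
        bus = [0.0] * n
        for (sx, sy), sig in sources:
            g = 1.0 / max(math.hypot(sx - mx, sy - my), min_distance)
            for i, s in enumerate(sig):
                bus[i] += g * s
        buses.append(bus)
    return buses

def convolve(signal, impulse_response):
    """Direct-form convolution, O(N * M)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out
```

With microphones placed at the back of the scene, a source moving backwards gets closer to them, so its contribution to the reverb buses grows while its direct level on the front loudspeakers drops, which is the distance cue described above.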

Motion Management

NESS lets you edit the movement of each source with a trajectory editor. The trajectory controls both the position and the speed of the sound object. Because the object takes the same time to cover each segment of the trajectory, bringing two points of the trajectory closer together decreases the speed, and moving them apart increases it [7].

During source movements, the delays calculated for each loudspeaker vary over time, which can create a Doppler effect. Interpolated delay lines, combined with smoothing of the delays by a second-order filter, prevent clicks and significantly reduce the Doppler effect. The time constant of the delay smoothing can be set in the spatialization parameters.
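The equal-duration rule for trajectory segments can be sketched as below, assuming straight-line interpolation between points and a single duration shared by all segments; the function names are hypothetical and NESS may interpolate differently.

```python
import math

def position_at(points, segment_duration, t):
    """Position on a polyline trajectory where every segment takes the same time.

    points: list of (x, y) trajectory points (at least 2)
    segment_duration: time in seconds to travel each segment
    t: time in seconds since the start, clamped to the trajectory bounds
    """
    n_segments = len(points) - 1
    if n_segments < 1:
        raise ValueError("need at least two points")
    u = min(max(t / segment_duration, 0.0), float(n_segments))
    i = min(int(u), n_segments - 1)  # index of the current segment
    frac = u - i                     # progress within that segment, 0..1
    (x0, y0), (x1, y1) = points[i], points[i + 1]
    return (x0 + frac * (x1 - x0), y0 + frac * (y1 - y0))

def segment_speeds(points, segment_duration):
    """Speed on each segment: length / duration, so shorter segments are slower."""
    return [math.dist(points[k], points[k + 1]) / segment_duration
            for k in range(len(points) - 1)]

# A 4 m segment followed by a 1 m segment, 2 s each: 2.0 m/s, then 0.5 m/s
speeds = segment_speeds([(0.0, 0.0), (4.0, 0.0), (5.0, 0.0)], 2.0)
```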
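A smoothed, interpolated delay line of this kind can be sketched as follows. The source does not specify the filter, so this hypothetical example assumes the second-order smoother is two cascaded one-pole low-pass filters (a critically damped response, with no overshoot) and that the delay line is read with linear interpolation; the class name and parameters are illustrative only.

```python
import math

class SmoothedDelay:
    """Hypothetical sketch of a delay line with a smoothed, fractional delay.

    The target delay (in samples) is smoothed by two cascaded one-pole
    low-pass filters, then the buffer is read with linear interpolation,
    so that a moving source changes its delay gradually and without clicks.
    """

    def __init__(self, max_delay, time_constant_samples, initial_delay=0.0):
        self.buffer = [0.0] * (int(max_delay) + 2)
        self.write = 0
        self.coef = math.exp(-1.0 / time_constant_samples)
        self.stage1 = self.stage2 = float(initial_delay)
        self.current = float(initial_delay)

    def process(self, x, target_delay):
        # Second-order smoothing of the delay value
        c = self.coef
        self.stage1 = c * self.stage1 + (1.0 - c) * target_delay
        self.stage2 = c * self.stage2 + (1.0 - c) * self.stage1
        self.current = min(max(self.stage2, 0.0), len(self.buffer) - 2)
        # Write the input sample, then read at the fractional position
        n = len(self.buffer)
        self.buffer[self.write] = x
        read = self.write - self.current
        i0 = math.floor(read)
        frac = read - i0
        a = self.buffer[i0 % n]
        b = self.buffer[(i0 + 1) % n]
        self.write = (self.write + 1) % n
        return a + frac * (b - a)
```

The time constant passed to the constructor plays the role of the delay smoothing time constant mentioned above: a longer constant slows down delay changes, which further reduces the pitch shift heard during fast movements.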

Bibliography

[1] E. Kurz, "Efficient prediction of the listening area for plausible reproduction", p. 98.

[2] V. Pulkki, "Spatial sound generation and perception by amplitude panning techniques", Helsinki University of Technology, Espoo, 2001.

[3] T. Lossius, "DBAP - Distance-Based Amplitude Panning", p. 5.

[4] A. J. Berkhout, "A Holographic Approach to Acoustic Control", J. Audio Eng. Soc., vol. 36, no. 12, pp. 977–995, 1988.

[5] J. C. Middlebrooks, "Sound localization", in Handbook of Clinical Neurology, vol. 129, Elsevier, 2015, pp. 99–116. doi: 10.1016/B978-0-444-62630-1.00006-8.

[6] X. Meynial, "Réflecteur sonore actif" [Active sound reflector], European patent application EP1211668A1, Nov. 2001.
